Welcome to AstroMLab

Who We Are

AstroMLab is a dynamic group of astrophysicists and computer scientists developing Large Language Models (LLMs) for astronomy. Our team includes:

- Leading astronomers, astrophysicists, and cosmologists

- Top natural language processing experts from Oak Ridge National Laboratory and Argonne National Laboratory

- Frontier arXivists from the NASA Astrophysics Data System

- Enthusiastic young researchers bridging astronomy and LLMs

Our Goals

- Develop specialized LLMs for astronomy

- Create reliable, light-weight, and open-source models for research agents

- Expedite scientific discovery through LLM-driven research

- Push the boundaries of astronomical research

Our Outputs

To date, we have released:

- The first astronomy-specific benchmarking dataset (Ting et al. 2024)

- Five model sets:

  - AstroSage-LLaMA-3.1-70B (de Haan et al. 2025b)

  - AstroSage-LLaMA-3.1-8B (de Haan et al. 2025a)

  - AstroLLaMA-2-70B (Pan et al. 2024)

  - AstroLLaMA-3-8B (Pan et al. 2024)

  - AstroLLaMA-2-7B (Perkowski et al. 2024, Nguyen et al. 2023)

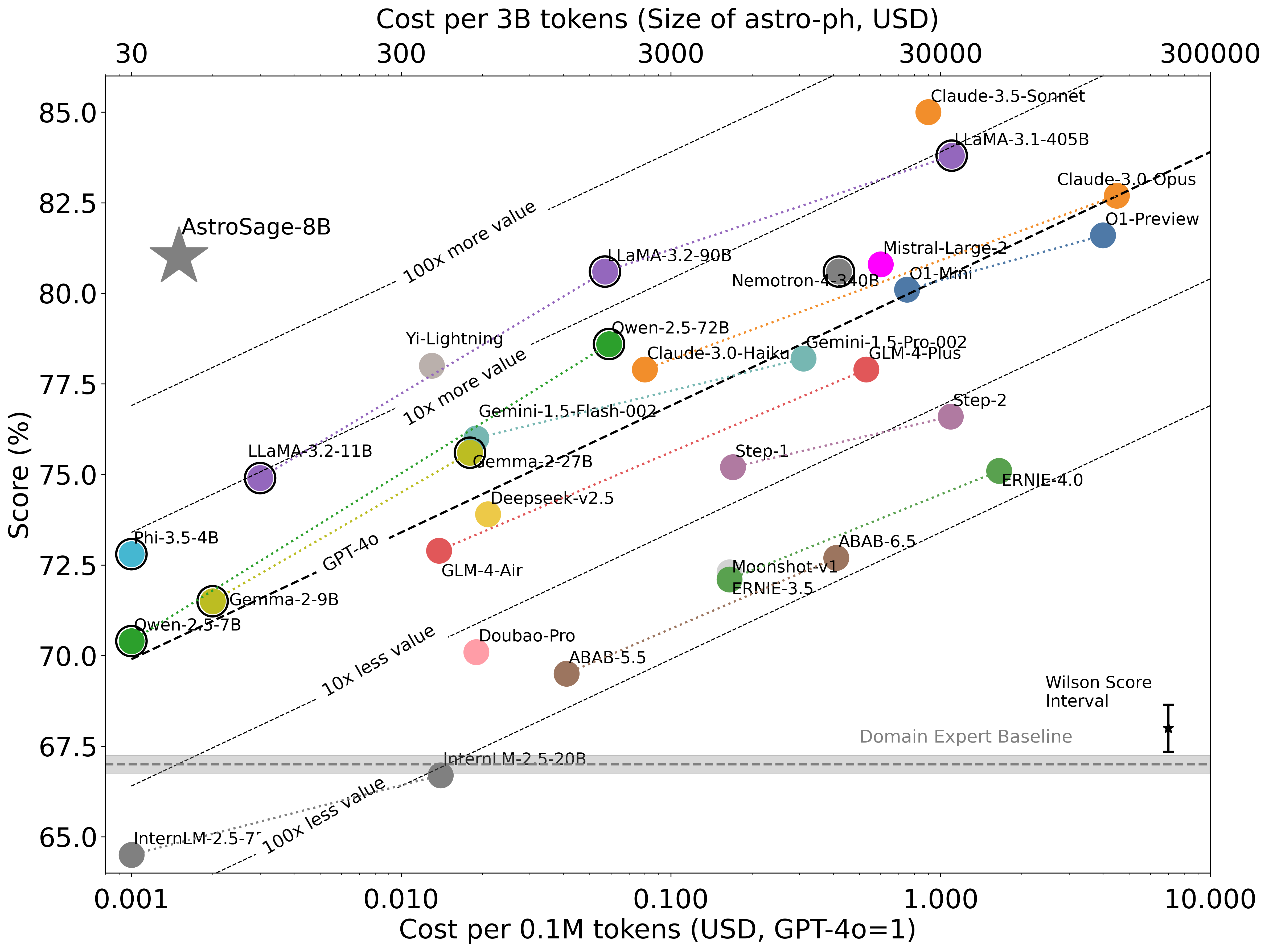

Our flagship models, AstroSage-LLaMA-3.1-70B and AstroSage-LLaMA-3.1-8B, achieve 86.2% and 80.9% accuracy, respectively, on the AstroMLab-1 benchmark. The 70B model effectively ties with Claude-4-Opus (86.3%) for the highest performance, while the 8B model performs comparably to Mistral-Large-v2 (80.8%) at a fraction of the cost (see AstroBench).

| Model | Score (%) |
|---|---|
| AstroSage-LLaMA-3.1-70B (AstroMLab) | 86.2 |
| Claude-4-Opus | 86.3 |
| o3 | 85.4 |
| Claude-4-Sonnet | 85.0 |
| GPT-4.1 | 84.7 |
| o4-Mini | 84.7 |
| Gemini-2.5-Pro | 84.8 |
| Deepseek-R1 | 84.4 |
| Qwen-3-235B | 84.0 |
| LLaMA-4-Maverick | 83.4 |
| Deepseek-v3-2503 | 82.9 |
| Gemini-2.5-Flash-0520 | 82.3 |
| LLaMA-4-Scout | 82.2 |
| Grok-3 | 81.7 |
| Mistral-Medium-v3 | 81.8 |
| AstroSage-LLaMA-3.1-8B (AstroMLab) | 80.9 |
| Mistral-Large-v2 | 80.8 |
| Qwen-3-32B | 79.7 |
| Mistral-Small-v3.1 | 78.6 |
| GPT-4.1-Nano | 78.0 |
| Gemini-2-Flash-Lite | 78.4 |
| Gemma-3-27B | 76.9 |
| Qwen-3-14B | 76.4 |
| AstroLLaMA-2-7B | 44.3 |

Open Source Commitment

All our models are available on Hugging Face.
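As a rough sketch of what using one of these checkpoints might look like, the snippet below loads a model with the Hugging Face `transformers` library and asks it a single question. The `model_id` argument is left to the caller because the exact repository names are not listed here. `build_messages`, `ask`, and the system prompt are hypothetical names chosen for illustration, and the code assumes the checkpoint ships a chat template.

```python
def build_messages(question, system_prompt="You are an expert in astronomy, astrophysics, and cosmology."):
    """Return a chat-style message list (hypothetical helper, not an AstroMLab API)."""
    return [
        {"role": "system", "content": system_prompt},
        {"role": "user", "content": question.strip()},
    ]


def ask(model_id, question, max_new_tokens=256):
    """Load a causal LM from the Hugging Face Hub and generate one answer.

    Assumes `transformers`, `torch`, and `accelerate` are installed and that
    the checkpoint provides a chat template.
    """
    # Imported lazily so build_messages works without the heavy dependencies.
    from transformers import AutoModelForCausalLM, AutoTokenizer

    tokenizer = AutoTokenizer.from_pretrained(model_id)
    model = AutoModelForCausalLM.from_pretrained(
        model_id, torch_dtype="auto", device_map="auto"
    )

    # Render the conversation to text first, then tokenize it. The template
    # already inserts special tokens, so none are added a second time.
    prompt = tokenizer.apply_chat_template(
        build_messages(question), tokenize=False, add_generation_prompt=True
    )
    inputs = tokenizer(prompt, return_tensors="pt", add_special_tokens=False).to(model.device)

    output_ids = model.generate(**inputs, max_new_tokens=max_new_tokens)
    # Drop the prompt tokens and decode only the newly generated ones.
    new_tokens = output_ids[0, inputs["input_ids"].shape[-1]:]
    return tokenizer.decode(new_tokens, skip_special_tokens=True)
```

Calling `ask(model_id, "What sets the Chandrasekhar mass?")` with an id copied from the relevant Hugging Face model page would download the weights on first use. A 70B-parameter checkpoint is roughly 140 GB in 16-bit precision, so the 8B models are the more practical starting point on a single GPU.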

Our Support

- Access to Frontier nodes at Oak Ridge Leadership Computing Facility

- Microsoft’s Accelerating Foundation Models Research (AFMR)

Join Us

Contact us: astromachinelearninglab@gmail.com

Team

| Name | Affiliation |
|---|---|
| Yuan-Sen Ting | The Ohio State University |
| Tirthankar Ghosal | Oak Ridge National Laboratory |
| Tijmen de Haan | KEK |
| Josh Nguyen | University of Pennsylvania |
| Rui Pan | University of Illinois Urbana-Champaign |
| Hardik Arora | Indian Institutes of Technology |
| Emily Herron | Oak Ridge National Laboratory |
| Yuwei Yang | Australian National University |
| Zechang Sun | Tsinghua University |
| Alberto Accomazzi | NASA Astrophysics Data System |
| Azton Wells | Argonne National Laboratory |
| Nesar Ramachandra | Argonne National Laboratory |
| Sandeep Madireddy | Argonne National Laboratory |

Publications

AstroMLab 4: Benchmark-Topping Performance in Astronomy Q&A with a 70B-Parameter Domain-Specialized Reasoning Model

We present AstroSage-LLaMA-3.1-70B, a 70-billion-parameter domain-specialized language model that achieves state-of-the-art performance on astronomical knowledge tasks. Built from Meta-Llama-3.1-70B through extensive continued pre-training on astronomical literature, supervised fine-tuning, and model merging, it demonstrates that domain specialization can enable a model to outperform even the most advanced commercial alternatives.

Key points:

- Achieves 86.2% accuracy on AstroBench, outperforming all tested models including o3, GPT-4.1, Claude-3.7-Sonnet, and Gemini-2.5-Pro

- Demonstrates ~100× improvement in cost-efficiency compared to achieving similar performance with general-purpose models

- Incorporates explicit reasoning capabilities that can be activated for complex multi-step astronomical analysis

- Is openly available under the Llama 3.1 Community License to accelerate AI adoption in astronomy research and education

AstroMLab 3: Achieving GPT-4o Level Performance in Astronomy with a Specialized 8B-Parameter Large Language Model

We present AstroSage-LLaMA-3.1-8B, a domain-specialized natural-language AI assistant tailored for research in astronomy, astrophysics, and cosmology. Through extensive data curation, massive continued pre-training, and supervised fine-tuning, we demonstrate that proper specialization of a relatively small model can achieve performance comparable to much larger flagship models.

Key points:

- Achieved 80.9% accuracy on the AstroMLab-1 benchmark, performing on par with GPT-4o while using only 8B parameters

- Demonstrated an 8-point improvement over the base LLaMA-3.1-8B model through domain specialization

- Maintained strong general capabilities in reasoning, mathematics, and coding despite optimization for astronomy

- Combined continued pre-training, supervised fine-tuning, and model merging techniques to enhance both domain expertise and instruction-following capabilities
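The model merging step mentioned above can take several forms, and the exact recipe used for AstroSage is not described here. As a minimal sketch, assuming the simplest variant (linear interpolation between two checkpoints with identical architectures), merging reduces to a weighted average of matching parameters. The function name `merge_linear`, the toy checkpoints, and the use of plain Python lists in place of tensors are all illustrative assumptions.

```python
def merge_linear(state_a, state_b, alpha=0.5):
    """Linearly interpolate two checkpoints: alpha * a + (1 - alpha) * b.

    `state_a` and `state_b` map parameter names to flat lists of floats,
    a stand-in for real tensors. Illustrative only; not the merging recipe
    used for AstroSage.
    """
    if not 0.0 <= alpha <= 1.0:
        raise ValueError("alpha must lie in [0, 1]")
    if state_a.keys() != state_b.keys():
        raise ValueError("checkpoints must share the same parameter names")

    merged = {}
    for name, weights_a in state_a.items():
        weights_b = state_b[name]
        if len(weights_a) != len(weights_b):
            raise ValueError(f"shape mismatch for parameter {name!r}")
        merged[name] = [
            alpha * a + (1.0 - alpha) * b for a, b in zip(weights_a, weights_b)
        ]
    return merged


# Toy example: a domain-trained checkpoint and an instruction-tuned one.
domain = {"layer.weight": [1.0, 2.0], "layer.bias": [0.0]}
instruct = {"layer.weight": [3.0, 4.0], "layer.bias": [1.0]}
merged = merge_linear(domain, instruct, alpha=0.75)
print(merged)
```

With `alpha=0.75` the merged weights sit three quarters of the way toward the domain checkpoint. The intuition is that the domain checkpoint contributes astronomy knowledge while the instruction-tuned one contributes instruction-following behavior.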

AstroMLab 2: AstroLLaMA-2-70B Model and Benchmarking Specialised LLMs for Astronomy

Rui Pan, Josh Nguyen, et al., 2024

We introduce two new models, AstroLLaMA-3-8B and AstroLLaMA-2-70B, which build upon the previous AstroLLaMA series, and we quantitatively assess specialized LLMs in astronomy using recently curated, high-quality astronomical multiple-choice questions (MCQs).

Key points:

- The previously released AstroLLaMA series (based on LLaMA-2-7B) underperforms the native LLaMA model.

- Performance degradation can be partially mitigated by using high-quality data for continual pretraining.

- Continual pretraining on the 70B model can yield improvements, despite observed catastrophic forgetting in smaller models.

AstroMLab 1: Who Wins Astronomy Jeopardy!?

Yuan-Sen Ting, et al., 2024, arXiv:2407.11194

We present a comprehensive evaluation of proprietary and open-weights large language models using the first astronomy-specific benchmarking dataset. This dataset comprises 4,425 multiple-choice questions curated from the Annual Review of Astronomy and Astrophysics, covering a broad range of astrophysical topics.

Key findings:

- Claude-3.5-Sonnet outperforms competitors, achieving 85.0% accuracy.

- Open-weights models like LLaMA-3-70b (80.6%) and Qwen-2-72b (77.7%) now compete with some of the best proprietary models.

- We identify performance variations across astronomical subfields, with challenges in exoplanet-related fields, stellar astrophysics, and instrumentation.

- Top-performing models demonstrate well-calibrated confidence, with correlations above 0.9 between confidence and correctness.

- The rapid progress suggests that LLM-driven research in astronomy may become feasible in the near future.
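To make the two headline metrics above concrete, here is a small sketch of how multiple-choice accuracy and a confidence-correctness correlation could be computed. How the paper measures calibration is not spelled out here, so correlating mean confidence with accuracy across confidence bins is only one plausible reading. All function names and the toy data are assumptions made for illustration, not benchmark results.

```python
from math import sqrt


def accuracy(predicted, correct):
    """Percentage of questions where the predicted letter matches the answer key."""
    if len(predicted) != len(correct) or not correct:
        raise ValueError("need two non-empty lists of equal length")
    hits = sum(p == c for p, c in zip(predicted, correct))
    return 100.0 * hits / len(correct)


def pearson(xs, ys):
    """Pearson correlation coefficient of two equal-length sequences."""
    n = len(xs)
    mx, my = sum(xs) / n, sum(ys) / n
    cov = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    var_x = sum((x - mx) ** 2 for x in xs)
    var_y = sum((y - my) ** 2 for y in ys)
    if var_x == 0 or var_y == 0:
        raise ValueError("correlation is undefined for constant input")
    return cov / sqrt(var_x * var_y)


def calibration_points(confidences, is_correct, n_bins=5):
    """Bin answers by confidence; return (mean confidence, accuracy) per non-empty bin."""
    bins = [[] for _ in range(n_bins)]
    for conf, ok in zip(confidences, is_correct):
        index = min(int(conf * n_bins), n_bins - 1)  # conf == 1.0 lands in the last bin
        bins[index].append((conf, ok))
    points = []
    for members in bins:
        if members:
            mean_conf = sum(c for c, _ in members) / len(members)
            acc = sum(ok for _, ok in members) / len(members)
            points.append((mean_conf, acc))
    return points


# Made-up toy data, not results from the benchmark.
key = ["A", "C", "B", "D", "A", "B"]
answers = ["A", "C", "D", "D", "B", "B"]
confidence = [0.95, 0.85, 0.30, 0.90, 0.35, 0.70]

correct_flags = [a == k for a, k in zip(answers, key)]
score = accuracy(answers, key)
points = calibration_points(confidence, correct_flags)
r = pearson([c for c, _ in points], [a for _, a in points])
print(f"accuracy: {score:.1f}%")
print(f"confidence-accuracy correlation across bins: {r:.2f}")
```

A well-calibrated model is right more often when it reports higher confidence, which shows up as a correlation close to 1 between the two columns of `points`.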

Legacy Output: The AstroLLaMA Series

- Josh Nguyen, et al., 2023, arXiv:2309.06126

- Ernest Perkowski, Rui Pan, et al., 2024, arXiv:2401.01916

The first open-source conversational AI tools tailored for the astronomy community: AstroLLaMA-2-7B and AstroLLaMA-2-7B-Chat.